YouTube videos: Local LLM

What is Ollama? Running Local LLMs Made Simple

If you don’t run AI locally you’re falling behind…

THIS is the REAL DEAL 🤯 for local LLMs

All You Need To Know About Running LLMs Locally

Learn Ollama in 15 Minutes - Run LLM Models Locally for FREE

How To Run Private & Uncensored LLMs Offline | Dolphin Llama 3

Set Up Your Own LLM Server at Home | Run Local AI Models with Ollama and NV...

4 levels of LLMs (on the go)

I Got the Smallest (and Dumbest) LLM

Let's Run Local AI Kimi K2 Thinking - Chart Topping 1 TRILLION Parameter Open Model

The Ultimate Guide to Local AI and AI Agents (The Future is Here)

Ollama vs Private LLM: Llama 3.3 70B Local AI Reasoning Test

Private & Uncensored Local LLMs in 5 minutes (DeepSeek and Dolphin)

Build a Local LLM App in Python with Just 2 Lines of Code

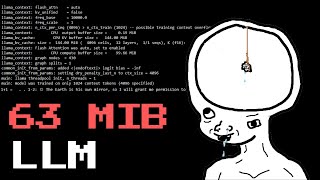

Run Local LLMs on Hardware from $50 to $50,000 - We Test and Compare!

host ALL your AI locally

run AI on your laptop....it's PRIVATE!!

Check out AnythingLLM if you’re looking for open source private local LLM for your team

Why LLMs get dumb (Context Windows Explained)